
Monthly Archives: May 2012

Use VirtualWisdom Alarms to Schedule Daily Tasks

The VirtualWisdom Service, part of the VirtualWisdom Platform, doesn’t necessarily do everything: our customers’ SANs differ in small details as well as larger ones, so VI Services often helps with some customization. In many cases, we set things up to run daily, such as grabbing zone info to convert to Nicknames, or converting Nicknames to UDCs and Filters.

In some cases, customers cannot edit the Windows Scheduler to run these tasks, and do not have a UNIX-like system with an available scheduler. This may be due to access restrictions or corporate policy. I wanted to share a workaround for this situation: (mis-)use the Alarm system to do so.

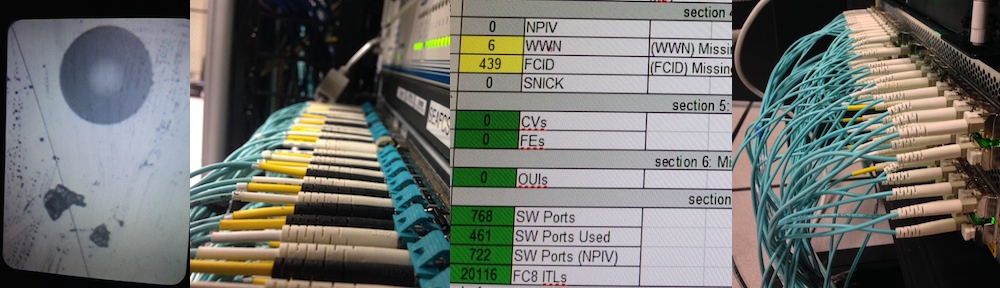

The following image may explain more efficiently than a walk-through:

As the name suggests, the alarm policy should be applied to only one ProbeSW, that is, to one SAN switch.

The alarm triggers when any data flows: the trigger is set to “> 0, 1 matching interval in domain of 1 interval”, and its only action is to run an external program. The configuration of that external program is also open in the editor, and you can see that it simply runs a script (using its full pathname).

The re-arm condition of that Alarm Policy Rule is “MB/sec != -1”. Because MB/sec can never go below zero, “-1” is impossible, so this condition always matches. The trick is that, once the rule has triggered, this condition has to match for 288 intervals (288 x 5 minutes = 24 hours) before the rule can trigger again. Effectively, this is a logic statement that says “don’t run more often than every 24 hours”.

This Alarm Policy Rule effectively runs immediately after the Portal Service is restarted or the Alarm Policy is applied to a switch, and every 24 hours thereafter (note that 288 might need to be 287 to avoid the run time drifting by 5 minutes each day).
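To make the re-arm arithmetic concrete, here is a small Python simulation. It assumes one particular model of how trigger and re-arm interact (the rule fires on an armed interval, then re-arms only after the re-arm condition has matched on a set number of intervals following the firing interval). That model is my assumption, not documented VirtualWisdom behaviour, but under it the 288-versus-287 remark falls out directly.

```python
INTERVAL_MINUTES = 5  # summary interval length, as described above


def firing_intervals(total_intervals: int, rearm_count: int) -> list[int]:
    """Return the interval indices at which the external script would run.

    ASSUMED model (a sketch, not documented VirtualWisdom behaviour):
    - the trigger ("MB/sec > 0") matches on every interval while data flows;
    - once fired, the rule is disarmed;
    - the re-arm condition ("MB/sec != -1") always matches, and the rule
      re-arms after it has matched on `rearm_count` intervals after firing;
    - a re-armed rule fires again on the next interval.
    """
    fired: list[int] = []
    armed = True
    matched_since_fire = 0
    for interval in range(total_intervals):
        if armed:
            fired.append(interval)
            armed = False
            matched_since_fire = 0
        else:
            matched_since_fire += 1
            if matched_since_fire >= rearm_count:
                armed = True
    return fired


for rearm in (288, 287):
    runs = firing_intervals(3 * 288 + 1, rearm)
    period = (runs[1] - runs[0]) * INTERVAL_MINUTES
    print(f"re-arm={rearm}: runs at intervals {runs[:3]}, every {period} minutes")
```

Under this model, a re-arm count of 288 gives a 1445-minute cycle (the 5-minute daily drift), while 287 gives exactly 1440 minutes. If the real alarm engine counts the firing interval toward re-arm, 288 would be the right value instead, so check the run times in your own alarm history.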

The “meat”, or complexity, here is in the BAT file: the Alarm uses the “External Script” action to run our batch file daily. This avoids configuring the OS Scheduler, but at the cost of not being able to choose the exact time. Additionally, the BAT file executes with the permissions of the Portal Server, which typically cannot access Network Shares and other remote resources.

Move Your VirtualWisdom Backups into Your Backed-Up Space

VirtualWisdom has an easy backup system: backups are as simple to configure as any other scheduled event, as frequently as daily, at any time, and with multiple schedules that can re-use the same configuration. However, every backup gets a new filename (VirtualWisdom does this to avoid overwriting a good backup with one that might hit an exception and be incomplete), which often leaves a new backup file behind each week, with no simple method of aging out old backups.

The Post-Backup Script in the Backup Service Configuration, if activated, runs after every backup: it simply executes a script with a few parameters. This gives the VirtualWisdom Administrator the flexibility to write any manner of script that runs as the VirtualWisdom process to automate moving backup files around, or, logically, any other task, even one unrelated to the backup.

As required by the underlying database vendor, our database files must remain untouched by backup and antivirus processes, which tend to lock files for long periods. Any locked data file tends to block database writes, slowing throughput and risking corruption of the data. This requirement also means that VirtualWisdom backups typically fall outside corporate backup tools and policies, so the risk of a backup not being preserved through a catastrophic filesystem failure is significant. Although VirtualWisdom handles only measurements and data about the data (not the data itself) and is not in the critical path of data I/O, losing VirtualWisdom means losing measurement and analysis tools that may be critical to resolving storage issues. Clearly we want the VirtualWisdom backup to be safely archived.

In this article, I’d like to share one example of how successful backups can be moved into filesystems covered by corporate backup policies, replacing past backups to avoid ever-increasing disk usage. My content here on the Virtual Instruments SAN Best Practices blog tends to be of a technical “how-to” nature; I hope this article helps define a customer’s backup configuration, keeping the data safe so that focus can return to the performance and availability of the SAN.

Overview

The basic backup process is a sequence such as:

- lock the database (database becomes read-only)

- quickly duplicate all database files

- unlock the database and let processing continue

- aggregate the backup files into a single file, optionally compressing

The feature we want to exploit to improve this process is the optional “Execute the following command upon completion” entry in a Backup Service Configuration, which we use to move the backup file to where it should be. In most cases, “where it should be” is a disk covered by corporate backup processes, with sufficient space to hold the compressed backup and to allow for organic growth (the database backup grows as the number of monitored ports, VMs, ESX hosts, and ITLs increases over time).

For our example, that is the “X” drive. Bear in mind that the backup script runs as the VirtualWisdom process, which runs as a service and hence has no access to network drives. In our example, the “X” drive might even be a SAN LUN. We recommend that the VirtualWisdom database disk not be on a SAN LUN, because it risks being affected by the very performance problems and exceptions that VirtualWisdom is trying to help users track and resolve; the archived backup, however, may be on a SAN LUN, because delays in writing it do not directly affect the performance of the VirtualWisdom platform.

Example Backup Service Configuration

Typically, your backup schedule would look like the following (except that my work server is small, so I have disabled mine by unchecking the checkbox beside the scheduled time):

… with a Backup Service Configuration such as:

Improved Backup Service Configuration

Instead of merely doing the backup, we can use the “post-backup script” to do the work for us. The “Post-Backup Script” is the name I’ve started using for the script that gets listed in the box for “Execute the following command upon completion”. An example script may be as simple as the following:

As we can see, when the second parameter given to the script (“%2”) is a 1, then the filename given as the first parameter (“%1”) is moved to the consistent filename X:\Backups\VirtualWisdomBackup.zip. The X:\ drive would be within normal backup policy, so routine backups would protect the database archive.

This batch file is run by entering it as a “post-backup script” as follows. NOTE: where possible, use a full pathname to ensure that the script is found and that it is the correct script.

As we can see in this Backup Service Configuration, we have enabled the “Execute the following command upon completion” checkbox, and listed our script as the script to run. The two parameters are selectable with the “Insert” box, or may be directly typed free-form.

When the script runs after a backup is complete, $BACKUP_STATUS$ is replaced by a 1 or a 0 depending on whether the backup was successful, and, as noted above, if this value is “1”, the working file is moved; otherwise, it’s left untouched. A possible enhancement would be to raise an alert that the backup failed (VirtualWisdom logs backup failures in the Portal log, but gives no other indication), or to delete or move aside a failed backup for analysis and fault resolution.
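The original post-backup script is a BAT file. As a hedged illustration of the same logic, here is a Python sketch; it assumes a Python interpreter is installed on the Portal server (the article does not assume this), and it would be registered with something like python.exe C:\Scripts\post_backup.py "$BACKUP_FILE$" $BACKUP_STATUS$, where the script path is hypothetical. Rather than moving the file directly, it copies to a temporary name beside the destination and then swaps it into place, so a failed copy never leaves a truncated VirtualWisdomBackup.zip behind.

```python
import os
import shutil
import sys
from pathlib import Path

# Destination taken from the article's example; adjust for your site.
DEFAULT_DEST = Path(r"X:\Backups\VirtualWisdomBackup.zip")


def archive_backup(backup_file: str, status: str, dest: Path = DEFAULT_DEST) -> bool:
    """Move a successful backup to a fixed filename, replacing the previous one.

    `status` is the $BACKUP_STATUS$ value: "1" means success. Failed backups
    are left untouched so they can be inspected.
    """
    if status.strip() != "1":
        return False
    dest.parent.mkdir(parents=True, exist_ok=True)
    partial = dest.with_name(dest.name + ".partial")
    shutil.copy2(backup_file, partial)  # copying works across drives (e.g. D: to X:)
    os.replace(partial, dest)           # same directory, so the old archive is swapped out in one step
    os.remove(backup_file)              # remove the working file only once the archive is in place
    return True


if __name__ == "__main__" and len(sys.argv) == 3:
    # Invoked as: post_backup.py <$BACKUP_FILE$> <$BACKUP_STATUS$>
    sys.exit(0 if archive_backup(sys.argv[1], sys.argv[2]) else 1)
```

Quoting $BACKUP_FILE$ matters because the generated backup names contain spaces. The non-zero exit code on failure is a hook for the alerting enhancement suggested above; whether VirtualWisdom acts on it is not something the article covers.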

When the backup is complete, a new backup file is created, named after the time that the backup started: backup - yyyy-mm-dd-HH-MM.zip, where yyyy is the year, mm is the month (zero-padded), dd is the day (zero-padded), HH is the hour (24-hour time), and MM is the minutes (zero-padded). Yes, this is intentionally very close to ISO 8601, which is the basis for RFC 3339 and the HTML5 and XML date formats. Because every backup gets a new, always-increasing filename, new backups never overwrite previous backups, but they are difficult to track down. The $BACKUP_FILE$ token is replaced by this filename, allowing the post-backup script to work with the correct filename every time.
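Because these names are ordered year-first, they can be sorted chronologically, which is the basis for any aging-out scheme. The Python sketch below assumes the "backup - yyyy-mm-dd-HH-MM.zip" pattern exactly as written above, with every field (including the hour) two digits wide; it parses the timestamp so unrelated files are skipped, then splits a directory listing into backups to keep and older ones that could be aged out. It only reports names and deletes nothing.

```python
import re
from datetime import datetime
from typing import Optional

# Assumed filename pattern, per the text: "backup - yyyy-mm-dd-HH-MM.zip"
BACKUP_RE = re.compile(r"^backup - (\d{4}-\d{2}-\d{2}-\d{2}-\d{2})\.zip$")


def backup_time(name: str) -> Optional[datetime]:
    """Return the start time encoded in a backup filename, or None if it doesn't match."""
    match = BACKUP_RE.match(name)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y-%m-%d-%H-%M")


def split_backups(names: list[str], keep: int = 1) -> tuple[list[str], list[str]]:
    """Split filenames into (newest `keep` backups, older backups), newest first."""
    dated = []
    for name in names:
        started = backup_time(name)
        if started is not None:
            dated.append((started, name))
    dated.sort(reverse=True)
    newest_first = [name for _, name in dated]
    return newest_first[:keep], newest_first[keep:]


listing = [
    "backup - 2012-05-07-02-00.zip",
    "backup - 2012-05-14-02-00.zip",
    "notes.txt",
    "backup - 2012-04-30-02-00.zip",
]
kept, stale = split_backups(listing)
print("keep:", kept)
print("age out:", stale)
```

Parsing with datetime, rather than relying on plain string sorting, also guards against files that merely start with "backup" but don't follow the pattern.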

We also rename the schedule itself to summarize the new behaviour, but this isn’t critical:

In most articles, I include a separate complete example, but this example is simple enough that the walk-through above is itself the complete example. Of course, changes will be needed for each unique environment: most backups do not write to the C:\ drive because there would not be sufficient space; rather, most configurations have a D:\ or E:\ drive for data, and that drive is used as the working drive during backups.

Quickly Create Filters for VirtualWisdom UDC Values

The UDC capability in VirtualWisdom is a powerful way to group fabric entities based on a number of parameters, but creating the filters to use a large UDC can be a bit cumbersome. UDC is VirtualWisdom’s User-Defined Context, which defines a virtual metric value within summaries, calculated from powerful expressions.

Typically, UDCs are used to separate and group entities such as:

- Physical Datacenter to filter physical-layer alerts (such as CRCs) to the correct ticket queue for inspection

- Business Unit (BU) UDCs to filter performance alerts (such as response time) against business-unit-specific thresholds (e.g. Oracle requires 12ms response time, but the NFS filer accepts 20ms)

- Port/Blade/ASIC calculations

- Grouping a SuperDome’s ports or an Array’s ports for filtered reports

As well, UDCs are used for “what-if” calculations: what if the SCSI traffic from a certain HBA were zoned to a different storage port; would it overload that port’s queue and link speed? What-if UDCs are an extremely powerful tool to prove capacity based on historical use, but somewhat out of scope for this article.

My content on Virtual Instruments’ SAN Best Practices blog tends to be of the how-to nature; in this article, I’d like to share a simple method of creating all the “X = Y” filters for a specific UDC programmatically, which can reduce the time-to-value in new installs or changing environments. When combined with other generation how-to articles (such as nickname collection, or generating UDCs by transform), this can further reduce the effort of managing a very large SAN.

Process Overview

For this process, our workflow will look like the following:

As you can see, the starting file “UDCExport.udc” can be either exported from the VirtualWisdom Portal itself or generated by other means. The file is converted by xsltproc, using UDC2Filter.xsl as the “program” or “script”, resulting in Filters.xml, which can be imported manually into VirtualWisdom.

Overview

UDC Files in VirtualWisdom are a specific schema of XML file; as such, standard easily-available license-free tools such as xpathget, xmllint, or xsltproc can be used to interrogate, validate, or convert the starting XML to a different format, even generating CSV or simple text in the process.

XSLT stands for XSL Transformations; XSL (the Extensible Stylesheet Language) is a stylesheet language for XML, similar to CSS describing the style of a free-form HTML page. In essence, XSL can be considered CSS for XML, but rather than only marking up content (such as typeface and style for large printed content), XSL can also transform and convert content. XSLT is the act of using XSL markup in a standalone processor (such as xsltproc) to create content based on XML content. In many cases, this is XML generating XML, but it can also be used to write TSV, CSV, JSON, etc.

VirtualWisdom Filters are exported as another XML schema, and can be similarly manipulated by standard XML tools. Even though XML is a text-based format, editing it by hand with a text editor is prone to human error. We can read XML for debugging (xmllint -format), but as the content grows larger, it is better to treat XML as though it were an opaque binary format and manipulate it with tools, which again leads us to XSLT and its free processor, xsltproc.

In our case, a specific XSLT file is used to manipulate a UDC definition into a list of Filter definitions: UDC2Filter.xsl guides the conversion of UDC Values to Filters which match them.
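The real UDC and Filter XML schemas aren’t reproduced in this article, so the Python sketch below uses invented element names (udc, value, filters, filter, condition); treat them as hypothetical placeholders. It only illustrates the shape of what UDC2Filter.xsl is described as doing, one “UDC = value” filter per distinct UDC value. Python’s standard library has no XSLT engine, so this walks the tree with ElementTree directly, and its output will not import into VirtualWisdom as-is.

```python
import xml.etree.ElementTree as ET

# HYPOTHETICAL input shape: the real VirtualWisdom UDC schema differs.
SAMPLE_UDC = """
<udc name="Application_SW">
  <value name="Billing"/>
  <value name="Payroll"/>
  <value name="Billing"/>
</udc>
"""


def udc_to_filters(udc_xml: str) -> ET.Element:
    """Build one hypothetical <filter> element per distinct UDC value."""
    udc = ET.fromstring(udc_xml)
    udc_name = udc.get("name")
    filters = ET.Element("filters")
    seen = set()
    for value in udc.iter("value"):
        label = value.get("name")
        if label in seen:
            continue  # one filter per distinct value
        seen.add(label)
        flt = ET.SubElement(filters, "filter", name=f"{udc_name} = {label}")
        ET.SubElement(flt, "condition", context=udc_name, equals=label)
    return filters


print(ET.tostring(udc_to_filters(SAMPLE_UDC), encoding="unicode"))
```

The useful property, which the XSL approach shares, is that the filter list is derived mechanically from the UDC, so regenerating after every UDC change keeps the two in step.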

Running the Script

xsltproc is available on most non-Windows platforms as an installable RPM, SSO, .deb, .pkg, or similar pre-packaged open source project; on Windows, it can be installed per SageHill’s Instructions; a file xsltproc.zip is easily obtained from any VI FAE to accelerate your install process.

Running it is quite simple:

xsltproc.exe -o Filters.xml UDC2Filter.xsl UDCExport.udc

There’s no console output: all generated content goes directly to the output file.

Complete Example

To show the full process, in case I’ve left out some details or some details seem implied, here is a full example based on data in our demo databases (which we use for demos and training):

Given the following UDC:

We export this UDC to Application_SW.udc and run the XSL transform as follows:

xsltproc.exe -o Application_SW_Filters.xml UDC2Filter.xsl Application_SW.udc

The result we get in Application_SW_Filters.xml looks like this:

Clearly this example is only a few filters, no big deal. The benefit comes when there are more than a half-dozen to build (recently, a 212-value UDC was tested). As well, whenever the UDC is edited (perhaps by automated processes), the administrator would otherwise have to go through and check that every value has a filter; re-running the transform regenerates them all.

Unfortunately, there is no schedule-action for Filter import.

Use LUN Nicknames in VirtualWisdom to Identify VLUN SymmDevice Names

When some customers look at the output of our Hardware Probes such as the 8g FC8, confirming that all their Oracle transactions are meeting the SLA of 8ms, the LUNs they see are a bit unusual. They’re used to seeing LUNs such as LUN32 and LUN33, but VirtualWisdom shows them LUNs like 17652, and they just don’t match up. This hinders the usefulness of the data, and may reduce their confidence in the data itself. When data drives decisions, it must be accurate, and it should be as easy to use as we can make it with the information available on the FC link.

The mismatch arises because the devices involved actually convert the LUN numbers: where VirtualWisdom shows you “17652”, that’s the actual LUN “on-the-wire”. The LUN in the SCSI frame really is that large number, but vendor-specific tools convert it to manageable, familiar numbers, which can make the actual LUN appear “wrong”. Although it would be very simple to say “VirtualWisdom isn’t making the same conversion as your vendor-specific tools”, we’d rather be more helpful: when you have a problem on your SAN, “VirtualWisdom doesn’t support…” offers no help towards fixing it. Rather than “17652”, we’d rather tell you “Symm Device 1E2B”, which, for a very important customer of ours, is a comfortable middle ground in identifiers and terms for the LUNs.

So how can we get a usable label on those LUNs? How do we do the lookups for you so that in an emergency, you have the details you need, already dereferenced?

As you’ve seen in the articles I’ve posted, I’m a Field Application Engineer for Virtual Instruments, and making this conversion — plus automating it — are the challenges I enjoy working on and sharing. This how-to article shares how to get SymmDevice aliases mapped onto LUNs as nicknames in a scriptable method. Let’s dive in:

Process Overview

For this process, our flow will look like the following:

In this diagram, the “syminq” command is used to generate the “syminq.txt” file; that part of the process depends heavily on the tools available on your servers, hence it is shown dotted.

Overview

We at Virtual Instruments have witnessed this odd mismatch in LUNs for some time, and initially we just said “Subtract 0x4000”, but that didn’t work precisely. In another instance, we found two servers with a very similar number, so the informal rule became “subtract 17506”, but we later found that this rule was unusable outside that customer. Recently, in very detailed discussions, a customer found the logic that gets them to their LUN Nicknames, as follows:

The “sysinfo” file looks like this: (mocked-up example from testcases)

disk 1071 8/0/12/1/0.140.0.54.6.1.4 sdisk CLAIMED DEVICE EMC SYMMETRIX

lunpath 1071 8/0/12/1/0.0x50060482d5123456.0x4392000000000000 eslpt CLAIMED LUN_PATH LUN path for disk6219

disk 1071 8/0/12/1/0.140.0.54.6.1.4 sdisk CLAIMED DEVICE EMC SYMMETRIX

disk 1071 8/0/12/1/0.140.0.54.6.1.4 sdisk CLAIMED DEVICE EMC SYMMETRIX

lunpath 1071 8/0/12/1/0.0x50060482d5123456.0x4392000000000000 eslpt CLAIMED LUN_PATH LUN path for disk6219

lunpath 1071 8/0/12/1/0.0x50060482d5123456.0x4392000000000000 online

The key part of this file is the line that says:

lunpath ...0x50060482d5123456.0x4392... disk6219

In this case, “50060482d5123456” is the storage device’s WWN, and 0x4392 is the LUN (17298 in decimal) we detect as the actual LUN used in the SCSI exchange for disk6219.
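The WWN and LUN can be pulled out of a lunpath line mechanically. This Python sketch assumes the layout of the mocked-up sample above (a hardware path ending in .0x followed by a 16-hex-digit WWN, then .0x followed by a 16-hex-digit LUN field, and later “LUN path for diskNNNN”); it takes the first two bytes of the LUN field, which reproduces the 0x4392 = 17298 reading described in the text. Lines without a disk name, such as the “online” line in the sample, are skipped.

```python
import re
from typing import Optional, Tuple

# Hardware path suffix: .0x<16-hex-digit target WWN>.0x<16-hex-digit LUN field>
PATH_RE = re.compile(r"\.0x([0-9a-fA-F]{16})\.0x([0-9a-fA-F]{16})\b")
DISK_RE = re.compile(r"LUN path for (disk\d+)")


def parse_lunpath(line: str) -> Optional[Tuple[str, int, str]]:
    """Return (storage WWN, LUN as decimal, disk name) for a lunpath line, else None."""
    if not line.startswith("lunpath"):
        return None
    path, disk = PATH_RE.search(line), DISK_RE.search(line)
    if path is None or disk is None:
        return None
    wwn = path.group(1).lower()
    lun = int(path.group(2)[:4], 16)  # first two bytes: "4392" -> 17298
    return wwn, lun, disk.group(1)


sample = ("lunpath 1071 8/0/12/1/0.0x50060482d5123456.0x4392000000000000 "
          "eslpt CLAIMED LUN_PATH LUN path for disk6219")
print(parse_lunpath(sample))  # ('50060482d5123456', 17298, 'disk6219')
```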

The “syminq” file looks for a certain server like this: (again, mocked-up example from testcases)

/dev/rdisk/disk6219 R1 000190102037 5773 3701E8B000 2096640

/dev/rdsk/c23t1d2 R1 000190102037 5773 3701E8B000 2096640

/dev/rdsk/c43t1d2 R1 000190102037 5773 3701E8B000 2096640

/dev/rdsk/c133t14d5 R1 000190102037 5773 3701E8B000 2096640

The key part of this file is the line that says:

...disk6219 ...3701E8B...

That rdisk entry is as follows: (keep in mind, the content is replaced by bogus values: some common values may not align anymore)

- /dev/rdisk/disk6219 is a local device node on the host

- I’m not sure what “R1” stands for

- 000190102037 is the Symmetrix serial number

- I’m not sure what “5773” means (model number?)

- 3701E8B000 breaks down as:

- 37 — last two digits of serial 000190102037

- 0 — not sure

- 1E8B — the SymmDevice ID

- 000 — Director port number

- 2096640 (2^21 -512) means the LUN has capacity ~2TB

I don’t have official information, but it’s possible that 01E8B is actually “Symmetrix device 01E” on “Director #8B” (a director number such as 0x8B, larger than 16, used to mean Processor B, but that convention seems a bit outdated now). The tools we use could easily emit 5-digit SymmDevice-XXXXX values if we could confirm this numbering breakdown.
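Taking the tentative breakdown above at face value, splitting a syminq line is a matter of fixed offsets in the fifth column. This Python sketch hard-codes those offsets (2 + 1 + 4 + 3 characters), which are a best reading of the mocked-up data rather than documented EMC semantics, so verify them against your own syminq output before relying on them. The field names in SyminqEntry are my own labels.

```python
from typing import NamedTuple, Optional


class SyminqEntry(NamedTuple):
    device: str        # e.g. "disk6219" (last component of the device node)
    serial: str        # Symmetrix serial number
    serial_tail: str   # assumed: last two digits of the serial
    symm_device: str   # assumed: SymmDevice ID, e.g. "1E8B"
    director: str      # assumed: director port number
    capacity: int      # last column, as printed by syminq


def parse_syminq(line: str) -> Optional[SyminqEntry]:
    """Split one syminq line using the tentative 2+1+4+3 breakdown of column 5."""
    fields = line.split()
    if len(fields) < 6 or len(fields[4]) != 10:
        return None
    code = fields[4]
    return SyminqEntry(
        device=fields[0].rsplit("/", 1)[-1],
        serial=fields[2],
        serial_tail=code[0:2],
        symm_device=code[3:7],
        director=code[7:10],
        capacity=int(fields[5]),
    )


print(parse_syminq("/dev/rdisk/disk6219 R1 000190102037 5773 3701E8B000 2096640"))
```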

Essentially, the SysInfo2LUNNickname.awk script does the following:

- pre-loads a syminq file to provide “better” nicknames where possible

- parses the sysinfo file to derive nicknames, substituting syminq results where possible

- outputs the results as a new-style (VirtualWisdom v2.1 or later) nickname CSV

The resulting nicknames.csv has a series of lines such as:

"LUN","50060482d5123456","17296","disk6217"

"LUN","50060482d5123456","17297","disk6218"

"LUN","50060482d5123456","17298","SymmDevice-1E8B"

"LUN","50060482d5123456","17299","disk6220"

As you can see, where there is a “better” match, “SymmDevice-1E8B” is used; otherwise, “diskXXXX” is still there. At this particular customer, “1E8B” is an example of the common name for their LUNs, rather than the 17298 reported by VirtualWisdom or the 0x4392 seen in hex.
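For readers who want to see the join logic without the AWK source, here is a minimal Python sketch of what SysInfo2LUNNickname.awk is described as doing: pre-load syminq into a lookup keyed by disk name, walk the sysinfo lunpath lines, and emit new-style nickname rows, preferring SymmDevice-XXXX where a syminq match exists. It is not the author’s script; the column positions and the 4-character device offset are assumptions carried over from the mocked-up samples above, and the four sysinfo lines below are synthetic input built to match the sample output.

```python
import csv
import io
import re

# Assumed lunpath layout: ...0x<16-hex WWN>.0x<4-hex LUN><12 hex> ... LUN path for diskNNNN
LUNPATH_RE = re.compile(
    r"\.0x([0-9a-fA-F]{16})\.0x([0-9a-fA-F]{4})[0-9a-fA-F]{12}\b.*LUN path for (disk\d+)"
)


def load_syminq(lines):
    """Map device name (e.g. "disk6219") to "SymmDevice-XXXX" (assumed column layout)."""
    better = {}
    for line in lines:
        fields = line.split()
        if len(fields) >= 5 and len(fields[4]) == 10:
            better[fields[0].rsplit("/", 1)[-1]] = "SymmDevice-" + fields[4][3:7]
    return better


def lun_nicknames(sysinfo_lines, syminq_lines):
    """Return new-style LUN nickname CSV text, preferring SymmDevice names."""
    better = load_syminq(syminq_lines)
    out = io.StringIO()
    writer = csv.writer(out, quoting=csv.QUOTE_ALL, lineterminator="\n")
    seen = set()
    for line in sysinfo_lines:
        match = LUNPATH_RE.search(line) if line.startswith("lunpath") else None
        if match is None:
            continue
        wwn, lun_hex, disk = match.groups()
        key = (wwn.lower(), int(lun_hex, 16))
        if key in seen:
            continue  # the same path can be listed more than once in sysinfo
        seen.add(key)
        writer.writerow(["LUN", key[0], key[1], better.get(disk, disk)])
    return out.getvalue()


# Synthetic input: four lunpath lines modelled on the sample above, plus one syminq line.
sysinfo = [
    f"lunpath 1071 8/0/12/1/0.0x50060482d5123456.0x{lun:04x}000000000000 "
    f"eslpt CLAIMED LUN_PATH LUN path for disk{disk}"
    for lun, disk in [(0x4390, 6217), (0x4391, 6218), (0x4392, 6219), (0x4393, 6220)]
]
syminq = ["/dev/rdisk/disk6219 R1 000190102037 5773 3701E8B000 2096640"]
print(lun_nicknames(sysinfo, syminq), end="")
```

Using a dictionary for the syminq lookup is the Python counterpart of the AWK associative array mentioned below, with the same memory trade-off for very large syminq files.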

For exceptionally large syminq files, because gawk.exe is loading up a large associative array, memory usage may temporarily peak; this has been tested on 500k – 1MB text files without visible adverse effects, but hidden memory usage such as interpreters’ associative arrays and implicit memory-management is something to be aware of.

Running the Script

gawk.exe -v SYMINQ=syminq.txt -f SysInfo2LUNNickname.awk sysinfo.txt > nicknames.csv

It’s that simple. To improve re-use, I wrote this logic as an AWK script, because I’ve never had portability problems with AWK apart from the UNIX/Windows CR/CRLF/LF line-ending debate.

The script is non-interactive, but prints the results, so the script is typically run with the output redirected to a file. Running it is very quiet, as you’d expect:

Complete Example

A complete example is difficult for this how-to because the files used are scattered on each server; collecting the sysinfo, and the syminq output from each server may be the more difficult part. My test example looks like the following (I always recommend using full pathnames for tools):

@echo off

cd \VirtualWisdomData\

REM the gawk command below is one logical line, continued with ^ for easier reading

C:\UnxUtils\usr\local\wbin\gawk.exe ^
-v SYMINQ=\sandata\syminq01.txt ^
-f \sandata\SysInfo2LUNicknames.awk ^
\sandata\sysinfo > \VirtualWisdomData\DeviceNickname\nicknames.csv

Similar to previous Nickname-generation/derivation tutorials, this process generates a single nicknames.csv file. You could append this result to any other generated file for which you already have an import schedule, or create a new schedule. To be as complete as possible, I’ve included an import schedule example, which should look very similar to the others (and is similarly brief: the User Guide has more detail regarding Schedules).

- Views Application, Setup tab:

- “Schedules” page, roughly 5th item down:

- Create a new Schedule, with the action “Import WWN Nicknames”: (or, if you prefer, “Import LUN Nicknames”)

- …and configure it to use a new WWN Importing Configuration, as follows. NOTE: we use only a local filename; all files are in the \DeviceNickname directory of your VirtualWisdomData folder:

Use UDCs to Collect Devices by Name Pattern in VirtualWisdom

Virtually all SAN devices that are zoned for traffic have names (in fact, if you have nicknames/aliases in your zone files, then you can directly convert zone info to nicknames). VirtualWisdom’s filtering capabilities allow you to restrict a Dashboard, Report, or Alarm Policy Ruleset to a specific datacenter or business unit, but often creating those UDCs can be cumbersome.

A recent customer created UDCs with 192 values across three metric sets, allowing him to group data by specific servers, storage, and virtualizers automatically; this “how-to” is intended to show how you can do the same.

Process Overview

For this process, we need only a set of nicknames; the simpler old-format nickname file looks like:

500604825D2E2144,"DMX1911_FA3AA"

2100001B329FE31D,"Billing44_HBA0"

10000000C741ABCD,"4241_7b1"

(Notice: WWN first, no spaces, optional quotes for safety)
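If you’d rather inspect or pre-process the nickname file outside awk, Python’s standard csv module already handles the “optional quotes” rule. This sketch assumes the two-column old format shown above (WWN, nickname); the 16-hex-digit WWN check is my own addition to skip malformed lines, and the unquoted third sample line is there only to show that quotes really are optional.

```python
import csv
import io
import re

WWN_RE = re.compile(r"^[0-9A-Fa-f]{16}$")


def read_nicknames(text: str) -> list[tuple[str, str]]:
    """Return (WWN, nickname) pairs from an old-format nickname file."""
    pairs = []
    for row in csv.reader(io.StringIO(text)):
        if len(row) >= 2 and WWN_RE.match(row[0].strip()):
            pairs.append((row[0].strip(), row[1].strip()))
    return pairs


sample = '''500604825D2E2144,"DMX1911_FA3AA"
2100001B329FE31D,"Billing44_HBA0"
10000000C741ABCD,4241_7b1
'''
for wwn, nick in read_nicknames(sample):
    print(wwn, nick)
```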

Generate UDC Values from Nickname Pattern

Our flow for this process or pipeline looks like the following diagram:

The tool we use here is “awk”, or “awk.exe”, or “gawk.exe”; in Solaris, look for “nawk”. It’s on virtually every non-Windows system, Microsoft has a version in its tools for UNIX, or Google may help you find a copy. As well, UnxUtils has a version.

Awk is an interpreter, so needs a script or program, and for that, we use TransformUDC.awk which takes the following parameters:

| Parameter | Meaning |
|---|---|
| COL | What column in the CSV input is the Nickname? (default: 1) |
| MIN | Minimum number of matching names required for a UDC value |
| NAMEFCX_LINK | Name of the ProbeFCX::Link UDC |
| NAMEFCX_SCSI | Name of the ProbeFCX::SCSI UDC |
| NAMEFCX_SCSIINIT | Name of the ProbeFCX::SCSI UDC, matching Initiators only |
| NAMEFCX_SCSITARG | Name of the ProbeFCX::SCSI UDC, matching Targets only |
| NAMESW | Name of the ProbeSW::Link UDC (default: Transformed_UDC) |
| TRANSFORM | Transform (basically the ‘s/x/y/g’ in a “sed -e ‘s/x/y/g’” command) (default: remove last two _sect_sect: DC_Serv1_fcs0_SW12P121 -> DC_Serv1) |
| UDCDEFAULT | Default value for UDCs (default: Unknown) |

A simple command such as the following will generate our results:

gawk.exe -f TransformUDC.awk Nicknames.csv

In our nickname file, the older format (first and second variants, up to VW-3.1) gives us the nickname as the second field, so let’s tell the script that the nickname is in column #2:

gawk.exe -v COL=2 -f TransformUDC.awk Nicknames.csv

The problem is: how do we want the UDC values defined? If you’ve used “sed” or “awk” before, there’s a basic replacement term that looks like s/dog/cat/g or gsub("dog","cat",$0) … this part really depends on your nickname format, but looking above, we have nicknames that look like:

500604825D2E2144,"DMX1911_FA3AA"

500604825D2E2145,"DMX1911_FA4AA"

500604825D2E7744,"DMX1927_FA3AA"

500604825D2E7745,"DMX1927_FA4AA"

500604825D2E7747,"DMX1927_FA6AA"

500604825D2E7748,"DMX1927_FA7AA"

500604825D2E774C,"DMX1927_FA13AA"

500604825D2E774D,"DMX1927_FA14AA"

2100001B329FE35D,"Billing43_HBA0"

2100001B329FE35E,"Billing43_HBA1"

2100001B329FE31D,"Billing44_HBA0"

2100001B329FE31E,"Billing44_HBA1"

10000000C741ABCD,"4241_7b1"

We see how, in this example, chopping off everything from the first “_” onward gives names such as “Billing43” and “DMX1927”. In sed and awk, we would write s/_.*$//g, so we’ll use that as our transform. How can we test this?

Trim Quotation Marks

We could trim off the quotation marks around the second field using the following (“,” as the field separator, and each (") replaced with an empty string):

gawk -F, '{gsub("\"","",$2); print; }' Nicknames.csv

… unfortunately, we need to use quotation marks for the script, and then we need a bunch of “\” escape sequences, so it looks much more complex running it:

gawk.exe -F, "{gsub(\"\\\"\",\"\",$2); print; }" Nicknames.csv

… which looks like:

Truncate All After “_”

Based on the example above, we can now test whether our transform (s/_.*$//g, or gsub("_.*$","",…)) gives us the results we want (notice “print $2”, so we’ll only see the second field):

gawk.exe -F, "{gsub(\"\\\"\",\"\",$2); gsub(\"_.*$\",\"\",$2); print $2; }" Nicknames.csv

This means our transform works, so let’s use it in the script:

gawk.exe -v TRANSFORM="s/_.*$//g" -v COL=2 -f TransformUDC.awk Nicknames.csv

Unfortunately, “4241” is not worthwhile to us because it’s only one matching name, so let’s trim that one off by saying “minimum of 2 matching names per UDC value”:

gawk.exe -v MIN=2 -v TRANSFORM="s/_.*$//g" -v COL=2 -f TransformUDC.awk Nicknames.csv
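To preview which UDC values a given transform and MIN setting will produce before generating the .udc file, the grouping step can be sketched in Python. This mirrors only the behaviour described for TransformUDC.awk (apply s/_.*$//, group WWNs by the result, drop values with fewer than MIN members); it does not reproduce the script’s UDC output format, and the nickname data is the sample list from above.

```python
import re
from collections import defaultdict

NICKNAMES = {
    "500604825D2E2144": "DMX1911_FA3AA",
    "500604825D2E2145": "DMX1911_FA4AA",
    "500604825D2E7744": "DMX1927_FA3AA",
    "500604825D2E7745": "DMX1927_FA4AA",
    "500604825D2E7747": "DMX1927_FA6AA",
    "500604825D2E7748": "DMX1927_FA7AA",
    "500604825D2E774C": "DMX1927_FA13AA",
    "500604825D2E774D": "DMX1927_FA14AA",
    "2100001B329FE35D": "Billing43_HBA0",
    "2100001B329FE35E": "Billing43_HBA1",
    "2100001B329FE31D": "Billing44_HBA0",
    "2100001B329FE31E": "Billing44_HBA1",
    "10000000C741ABCD": "4241_7b1",
}


def group_by_transform(nicknames, pattern=r"_.*$", replacement="", minimum=2):
    """Group WWNs by transformed nickname, keeping values with >= `minimum` members."""
    groups = defaultdict(list)
    for wwn, nickname in nicknames.items():
        groups[re.sub(pattern, replacement, nickname)].append(wwn)
    return {value: wwns for value, wwns in groups.items() if len(wwns) >= minimum}


for value, wwns in sorted(group_by_transform(NICKNAMES).items()):
    print(value, len(wwns))
```

With minimum=2, “4241” drops out and four values remain (Billing43, Billing44, DMX1911 and DMX1927), matching the result the gawk commands above are aiming for.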

Finally, what do we want to call the UDC? The tool always generates a ProbeSW::Link UDC and, if it is unnamed, defaults to “Transformed_UDC”. The UDC name is limited to 32 characters, and the values themselves to 24 characters; the name of the UDC becomes the name of the “metric” or context that we are generating. Suppose that, while working at XYZ Cheese and Dairy Distributors, we want a UDC called xyz-SW-BizUnit (we need to use “_” rather than “-”):

gawk.exe -v NAMESW="xyz_SW_BizUnit" -v MIN=2 -v TRANSFORM="s/_.*$//g" -v COL=2 -f TransformUDC.awk Nicknames.csv
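The limits quoted above (32 characters for the UDC name, 24 for each value, and “_” instead of “-” in the name) are easy to check before importing. Here is a small Python sketch, assuming those are the only constraints; VirtualWisdom may enforce others that this does not cover.

```python
def udc_problems(name: str, values: list[str]) -> list[str]:
    """List violations of the UDC naming limits described in the text."""
    problems = []
    if len(name) > 32:
        problems.append(f"UDC name {name!r} is longer than 32 characters")
    if "-" in name:
        problems.append(f"UDC name {name!r} contains '-'; use '_' instead")
    for value in values:
        if len(value) > 24:
            problems.append(f"value {value!r} is longer than 24 characters")
    return problems


print(udc_problems("xyz_SW_BizUnit", ["Billing43", "DMX1927"]))  # []
print(udc_problems("xyz-SW-BizUnit", ["A" * 30]))
```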

Let’s run this with a redirection (“>”) to store the results to a file:

gawk.exe -v NAMESW="xyz_SW_BizUnit" -v MIN=2 -v TRANSFORM="s/_.*$//g" -v COL=2 -f TransformUDC.awk Nicknames.csv > \VirtualWisdomData\UDCImport\xyz-UDCs.udc

Running this as a command in cmd.exe is relatively quiet because this is a non-interactive command. It tends to look like the following:

Importing this example, we see the following (you’ll note: the default has also been set using “-v UDCDEFAULT=Other”) :

Schedule UDC Import

Creating the schedule is relatively straightforward: although there is some strong guidance in the VirtualWisdom User Guide, a complete example would look like the following:

- Views Application, Setup tab:

- “Schedules” page, roughly 5th item down:

- Create a new Schedule, with the action “Import UDC configurations”:

- …and configure it to use a new UDC Importing Configuration, as follows. NOTE: we use only a local filename; all files are in the \UDCImport\ directory of your VirtualWisdomData folder:

The benefit here is that the UDC always replaces existing values without prompting. As well, after import, a UDC re-calculates values for both past summaries and new summaries. This allows you to “fix history” if your UDC is not quite correct the first time.