UDCs (User-Defined Context) can be very useful for showing the actual use or membership of a device on the SAN, or for assigning a priority for alerting and thresholds. Often these are created by hand, but we do have methods of generating them from other content.

One customer has both: a UDC generated/converted from other content, and some manually assigned content. Merging these would help him assign filters and alerts as a single group, but the effort to merge them by hand was looking excessive.

As you may recall, my content on the Virtual Instruments Best Practices blog tends to be of the how-to variety. In this article, I’d like to share how to merge two UDCs programmatically, in a way that can then be scripted into any automated collection scripts or tools you’re already using.

Merge, Then Clean Up

The general process we use is to merge the content first (using xmllint), then clean it up (using xsltproc) so that the result is once again a sane, predictable UDC, ready for a routinely scheduled import:

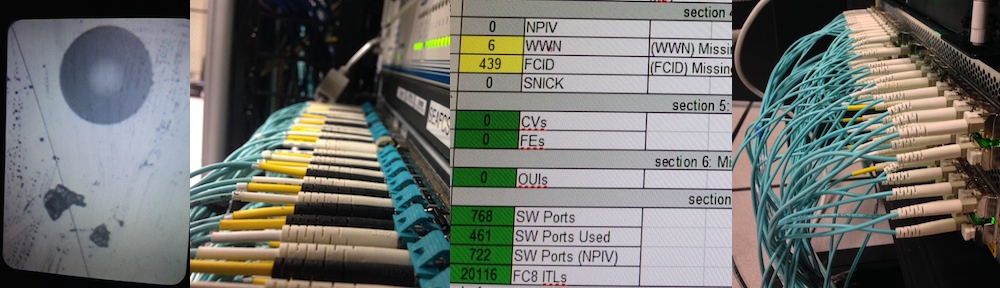

Notice in this image that the only things that change are in the upper box (“UDC Files”), which can be either manually edited or automatically generated by a filter or transform. The result is a standard UDC, from which we can generate filters, or which we can edit further using XML tools.

As you can see, the tools used here are fairly standard; the only real development work is the small script written for each tool. UDCs are simply XML and, as such, quite easy to manipulate using standard XML tools.

Let’s break this down into its individual steps.

Concatenation

The easiest way I found to concatenate two XML files was to use XInclude with an XPointer statement:

    <?xml version="1.0"?>
    <list xmlns:xi="http://www.w3.org/2001/XInclude">
      <xi:include href="File1.udc" xpointer="xpointer(//list/*)"/>
      <xi:include href="File2.udc" xpointer="xpointer(//list/*)"/>
    </list>

Broken into parts, this is really just repeated copies of the following element:

    <xi:include
        href="File1.udc"
        xpointer="xpointer(//list/*)"
    />

If you’ve written any source code, you’ll recognize an #include statement or an import com.example.*; this is really no different: the document referenced by the “href” (File1.udc) replaces the xi:include element. The second attribute, xpointer="...", narrows the import so that only part of the included document comes in: in this case, the child elements of the “list” element. If you look at a UDC file, you’ll see that “list” is the root node; if that statement makes very little sense, then think of this as “we’re including all the stuff inside the outermost container, but not the container itself”.

And hey, look again at the full file above: we specify a <list> and </list> around the inclusions. Coincidence? Not at all; this avoids ending up with two outermost root nodes. A well-formed XML document can have only one root element, so a file with two could not be processed any further with XML tools.

…and it’s easier this way: don’t filter out later what you can avoid including in the first place. It’s possible that there’s a better set of inclusion expressions here, but this works well enough.

If we had three UDCs to merge, it would merely require a third xi:include element.
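Since each additional UDC is just one more xi:include line, the wrapper file itself can be generated. Here is a minimal sketch in Python that writes out the same structure shown above for any number of input files; the function name build_wrapper is my own invention for illustration, and the file names are simply the ones used in this article.

```python
from xml.sax.saxutils import quoteattr


def build_wrapper(udc_files):
    """Return the text of an XInclude wrapper, one xi:include per UDC file.

    build_wrapper is a hypothetical helper name; the XML it emits mirrors
    the hand-written concatenation file shown earlier.
    """
    lines = [
        '<?xml version="1.0"?>',
        '<list xmlns:xi="http://www.w3.org/2001/XInclude">',
    ]
    for name in udc_files:
        # quoteattr() escapes the file name and adds the surrounding quotes.
        lines.append(
            f'  <xi:include href={quoteattr(name)} xpointer="xpointer(//list/*)"/>'
        )
    lines.append("</list>")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example: write a wrapper for three UDCs instead of two.
    with open("concatenate-UDC.xml", "w", encoding="utf-8") as handle:
        handle.write(build_wrapper(["File1.udc", "File2.udc", "File3.udc"]))
```

The generated file is then fed to xmllint exactly like the hand-written one.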

To act on this file, we execute xmllint using the “-xinclude” parameter as follows (that is a normal ASCII hyphen, not a typographic dash; one hyphen is enough, although xmllint also accepts the two-hyphen form). Note that xmllint is available on most non-Windows systems, and for a Windows system it should be easy to obtain through Microsoft Services for UNIX.

xmllint.exe -xinclude concatenate-UDC.xml > Merged.udc

on Windows, or on non-Windows systems:

xmllint -xinclude concatenate-UDC.xml > Merged.udc

(using “Merged.udc” as a temporary file)
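If xmllint is not available on a given system, roughly the same concatenation can be approximated with Python’s standard library. This is an alternative sketch rather than the method used in this article: it assumes that “list” appears only as the root element of each UDC, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET


def merge_udc_roots(roots):
    """Copy the children of each parsed <list> root under one new <list>."""
    merged = ET.Element("list")
    for root in roots:
        if root.tag != "list":
            raise ValueError(f"expected a <list> root element, found <{root.tag}>")
        # Take everything inside the outermost container, not the container.
        merged.extend(list(root))
    return merged


def merge_udc_files(paths, out_path):
    """Parse each UDC file, merge the contents, and write a single file."""
    merged = merge_udc_roots(ET.parse(path).getroot() for path in paths)
    ET.ElementTree(merged).write(out_path, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    merge_udc_files(["File1.udc", "File2.udc"], "Merged.udc")
```

As with the xmllint output, the result still needs the cleanup step before it is imported.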

We now have a UDC file with a single outermost “list” (root) element, but it has a few problems:

- Every new UDC starts with an Evaluation Order of 1, so the merged file carries overlapping Evaluation Order values from its sources, and these have to be fixed

- Only one default item should be given, but each source file brings its own: we keep the one from the first file

- We only copy the first file’s definition of the UDC (Metric, set, etc.), so the user needs to avoid doing this on two UDCs of different metric/set (illogical UDCs will result)

The first two issues can be fixed in the next step.

Clean the Concatenated Result

XSLT, or XSL Transformations, uses XSL (Extensible Stylesheet Language) to transform XML into different XML, or even into a simpler form such as plain text or a loosely structured format such as CSV. In general, XSLT can map XML data from one schema to another, or simply extract elements of the data into a text stream.

In our case, we’re using it to remove the redundant parts that would cause VirtualWisdom’s UDC parser to reject the document. There is currently no schema definition, so we have to do our best to make the resulting UDC look like one exported from VirtualWisdom.
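To make the two fixes concrete, here is a rough illustration of the logic, written in Python rather than XSLT. It is not the stylesheet used in this article, and the element and attribute names in it are placeholders I made up (this article does not show the UDC’s internal names), so treat it only as a picture of “keep the first default, renumber the evaluation order”.

```python
import xml.etree.ElementTree as ET

# HYPOTHETICAL names: the real UDC element and attribute names are not
# shown in this article, so these are stand-ins for illustration only.
ENTRY_TAG = "entry"
ORDER_ATTR = "evaluationOrder"
DEFAULT_TAG = "default"


def clean_merged(root):
    """Keep only the first default item and renumber entries from 1."""
    seen_default = False
    next_order = 1
    # Iterate over a copy, because we may remove children while looping.
    for child in list(root):
        if child.tag == DEFAULT_TAG:
            if seen_default:
                root.remove(child)  # drop defaults from later files
            seen_default = True
        elif child.tag == ENTRY_TAG:
            child.set(ORDER_ATTR, str(next_order))
            next_order += 1
    return root
```

The third issue, mismatched metric/set definitions, is deliberately not handled here; that one is still up to the person choosing which UDCs to merge.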

The XSLT is a bit complex to post here, but it should be available by clicking on the marked-up filename below. Note that, like xmllint, xsltproc is widely available as a Linux and UNIX package, or via the Microsoft Services for UNIX (currently a re-packaged Cygwin environment).

We execute the cleanup XSLT as follows:

xsltproc.exe concatenate-UDC.xsl Merged.udc > \VirtualWisdomData\UDCImport\Combined.udc

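on Windows, or on non-Windows systems (written with forward slashes, since most shells treat an unquoted backslash as an escape character; adjust the path to wherever the VirtualWisdomData folder is reachable):

xsltproc concatenate-UDC.xsl Merged.udc > /VirtualWisdomData/UDCImport/Combined.udc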

Note here that the stylesheet is similarly named, but ends in “xsl”, not “xml”. Also, we write the output directly into the UDCImport directory of a VirtualWisdomData folder, which is where an import schedule would look for it.
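For anyone folding this into an existing collection script, the two commands can be chained in a few lines of Python. This is only a sketch under my own assumptions: xmllint and xsltproc must be on the PATH, the function names are hypothetical, and the file names are the ones used in this article.

```python
import subprocess


def build_commands(wrapper, stylesheet, merged):
    """Return the two command lines used in this article, as argument lists."""
    return [
        ["xmllint", "-xinclude", wrapper],   # step 1: concatenate
        ["xsltproc", stylesheet, merged],    # step 2: clean up
    ]


def merge_and_clean(wrapper, stylesheet, merged, combined):
    """Run both steps, redirecting each tool's stdout to a file."""
    concat_cmd, clean_cmd = build_commands(wrapper, stylesheet, merged)
    with open(merged, "wb") as out:
        # check=True raises CalledProcessError if the tool exits non-zero.
        subprocess.run(concat_cmd, stdout=out, check=True)
    with open(combined, "wb") as out:
        subprocess.run(clean_cmd, stdout=out, check=True)


if __name__ == "__main__":
    merge_and_clean(
        "concatenate-UDC.xml",
        "concatenate-UDC.xsl",
        "Merged.udc",
        "Combined.udc",  # point this at your UDCImport directory
    )
```

One design note: if either tool fails partway, a partial output file can be left behind, so a production script might write to a scratch location first and move the finished file into the import directory afterward.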

This resulting file can be directly imported; an example import schedule is at the bottom of the Use UDCs to Collect Devices by Name Pattern article presented on May 1st, 2012. As well, because the UDC is in a standard form, it can be used to Quickly Create Filters for use in Dashboards, Reports, and Alarms.

Evolving SANs tend to have evolving naming schemes and assignment methods, so there will often be many different systems of identifiers that can be joined to work with different Business Units, customers, or functional groups; different groups tend to cause different sources of information to be polled, and different formats to result. I hope this process helps you reduce the manual copying of data attributes, which is so prone to human error and scheduling delays.

I hope this helps you to “set it and forget it” on more sources of data. Accurate data drives decisions: how can you methodically fix what you cannot measure and make sense of?